For the modern enterprise, the "front door" of customer and employee service is undergoing a fundamental architectural shift. For years, the Interactive Voice Response (IVR) system has been the gatekeeper—a rigid, reliable, but often frustrating sentry. However, as organizations strive to meet the rising expectations of a digital-first workforce and consumer base, the conversation has moved beyond simple touch-tone menus toward the fluid, non-deterministic world of Generative AI and Large Language Models (LLMs).

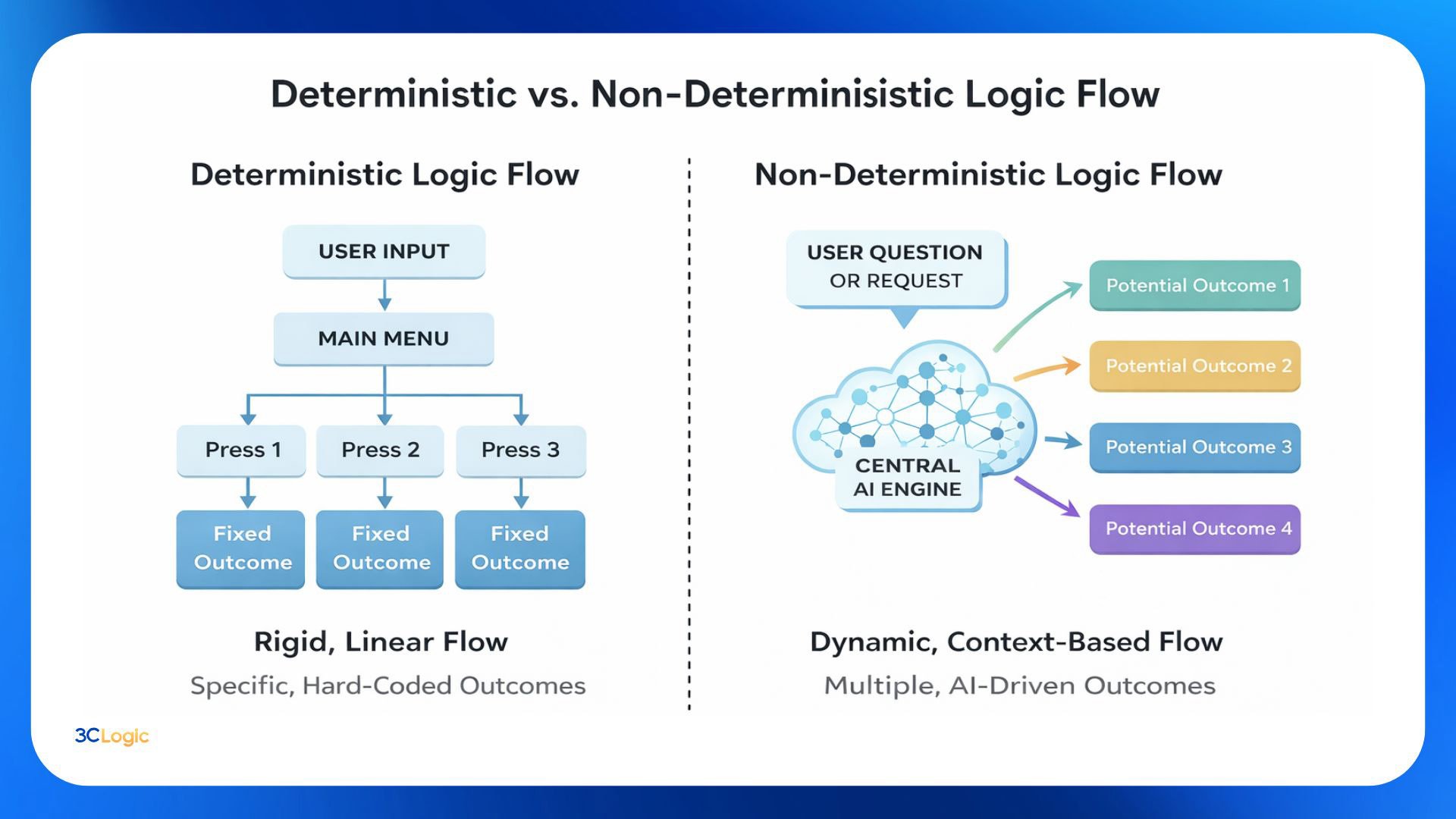

For Customer Experience (CX) executives, IT Service Desk Managers, and platform owners, the challenge is no longer just about "automation." It is about understanding the structural difference between deterministic systems (where every input has a single, pre-defined path) and non-deterministic systems (where the AI interprets intent and formulates a unique response).

Balancing these two approaches is critical for optimizing First Contact Resolution (FCR) while maintaining the governance and predictability that enterprise operations demand.

1. The Deterministic Era: The Logic of the Menu

A deterministic system, typical of traditional IVR and basic Natural Language Understanding (NLU) engines, operates on finite-state logic: every recognized input maps to exactly one pre-defined transition. If a user presses "1" or says "Check Status," the system follows a hard-coded branch.
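The following minimal Python sketch makes the finite-state idea concrete by modeling a menu as a state machine. The state names, prompts, and accepted inputs are hypothetical. Every recognized input leads to exactly one next state, and any other input leads nowhere.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class MenuState:
    prompt: str
    # Accepted input (DTMF digit or exact phrase) -> name of the next state.
    transitions: dict[str, str] = field(default_factory=dict)


# Hypothetical menu: every path is enumerated ahead of time.
MENU: dict[str, MenuState] = {
    "main": MenuState(
        "Press 1 or say 'Check Status'. Press 2 or say 'Reset Password'.",
        {
            "1": "check_status",
            "check status": "check_status",
            "2": "reset_password",
            "reset password": "reset_password",
        },
    ),
    "check_status": MenuState("Please enter your ticket number."),
    "reset_password": MenuState("Please enter your employee ID."),
}


def next_state(current: str, user_input: str) -> str:
    """Follow the hard-coded branch; unrecognized input stays in the current state."""
    key = user_input.strip().lower()
    return MENU[current].transitions.get(key, current)
```

`next_state("main", "Check Status")` returns `"check_status"`. An unanticipated request such as `"my laptop is broken"` leaves the caller in `"main"`, which is the dead-end behavior the cons list describes.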

The Pros: Predictability and Compliance

In highly regulated industries and for specific IT workflows (for example, password resets or simple status updates), determinism is a feature, not a bug.

- Total Control: every word the system speaks is pre-approved by legal, compliance, or brand teams.

- Predictable Routing: there is zero "hallucination" risk. The system cannot go off-script because every path is scripted.

- Lower Compute Costs: deterministic logic requires minimal processing power compared to real-time LLM inference.

The Cons: The "Dead-End" Experience

The rigidity that provides control also creates friction. Market trends in 2024 and 2025 consistently show that traditional IVRs remain a primary source of customer dissatisfaction due to "menu fatigue" and the inability to handle "long-tail" queries—questions that don't fit into the pre-defined categories.

- Limited Intent Discovery: if a user’s problem is complex (e.g., "I need to dispute a charge but I also lost my card and I'm traveling"), a deterministic IVR often fails, forcing a high-cost transfer to a human agent.

- High Maintenance: every new product or service requires manual flow updates, creating a bottleneck for IT teams and system-of-record administrators (e.g., ServiceNow owners).

2. The Non-Deterministic Frontier: Voice AI and Intent

Non-deterministic Voice AI, powered by Generative AI and advanced LLMs, does not rely on a flowchart. Instead, it uses probabilistic modeling to determine the best response based on the context of the conversation.

The Pros: Human-Centric Flow and Efficiency

- Intent Recognition Accuracy: industry analysis highlights that the move from keyword-based NLU to LLM-based interpretation has significantly narrowed the gap between how people actually speak and what machines can understand, allowing users to speak naturally.

- Context Retention: non-deterministic systems can remember information from the start of the call and apply it later, eliminating the need for callers to repeat themselves.

- Reduced Development Cycles: instead of building hundreds of branches for every possible question, administrators can point an AI agent toward a verified knowledge base (KB) to generate responses dynamically.
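Context retention works like a small data structure. The agent stores facts it has already heard and checks that store before it asks a question again. The sketch below uses a hypothetical `CallContext` class with simple regular expressions as stand-in extractors. A real deployment would more likely have the LLM or an NLU service extract entities. The `INC` plus seven-digit ticket pattern is an illustrative assumption.

```python
from __future__ import annotations

import re
from dataclasses import dataclass, field


@dataclass
class CallContext:
    """Facts captured earlier in the call, reused in later turns."""
    slots: dict[str, str] = field(default_factory=dict)

    def remember(self, utterance: str) -> None:
        # Stand-in extractors (hypothetical); an LLM or NLU service would normally do this.
        ticket = re.search(r"\bINC\d{7}\b", utterance)
        if ticket:
            self.slots["ticket_id"] = ticket.group(0)
        if "laptop" in utterance.lower():
            self.slots["device"] = "laptop"

    def ask_for(self, slot: str, question: str) -> str | None:
        """Return the question only if the slot is still unknown; otherwise skip it."""
        return None if slot in self.slots else question
```

A caller who gives a ticket number in the first sentence is not asked for it again. Only facts that are still missing trigger a question.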

The Cons: The Governance Challenge

- Probabilistic Risk: since the system is non-deterministic, it may phrase things differently each time. This creates "black box" concerns for compliance.

- Integration Complexity: to be truly effective, Voice AI must be deeply integrated with the system of record (e.g., ITSM, HRSD, or CRM platforms) to pull real-time data, requiring robust API management.
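Deep integration usually means the model can request a lookup and the platform runs it against the system of record. This minimal sketch makes two assumptions. First, tool calls arrive as JSON shaped like `{"name": ..., "arguments": {...}}`, which resembles common LLM function-calling formats but is not any specific vendor's API. Second, an in-memory dictionary stands in for a real ITSM or CRM REST endpoint. `get_ticket_status` and `FAKE_TICKETS` are hypothetical.

```python
from __future__ import annotations

import json
from typing import Any, Callable

# Hypothetical in-memory stand-in for a system-of-record API (ITSM or CRM).
FAKE_TICKETS = {
    "INC0012345": {"state": "In Progress", "assigned_to": "Network Team"},
}


def get_ticket_status(ticket_id: str) -> dict[str, Any]:
    """Hypothetical lookup; a real integration would call the platform's REST API."""
    record = FAKE_TICKETS.get(ticket_id)
    if record is None:
        return {"error": f"Ticket {ticket_id} not found"}
    return {"ticket_id": ticket_id, **record}


# Allowlist of operations the voice agent may trigger.
TOOLS: dict[str, Callable[..., dict[str, Any]]] = {
    "get_ticket_status": get_ticket_status,
}


def dispatch(tool_call_json: str) -> dict[str, Any]:
    """Execute a model-proposed call shaped like {"name": ..., "arguments": {...}}."""
    call = json.loads(tool_call_json)
    tool = TOOLS.get(call.get("name"))
    if tool is None:
        return {"error": f"Unknown tool {call.get('name')!r}"}
    return tool(**call.get("arguments", {}))
```

The allowlist is a deliberate choice. The model can only trigger operations someone has explicitly registered, which keeps API management in the platform team's hands.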

The "Logic Flow" Comparison (Deterministic vs. Non-Deterministic)

3. Grounding the Conversation: The Power of RAG for Voice AI

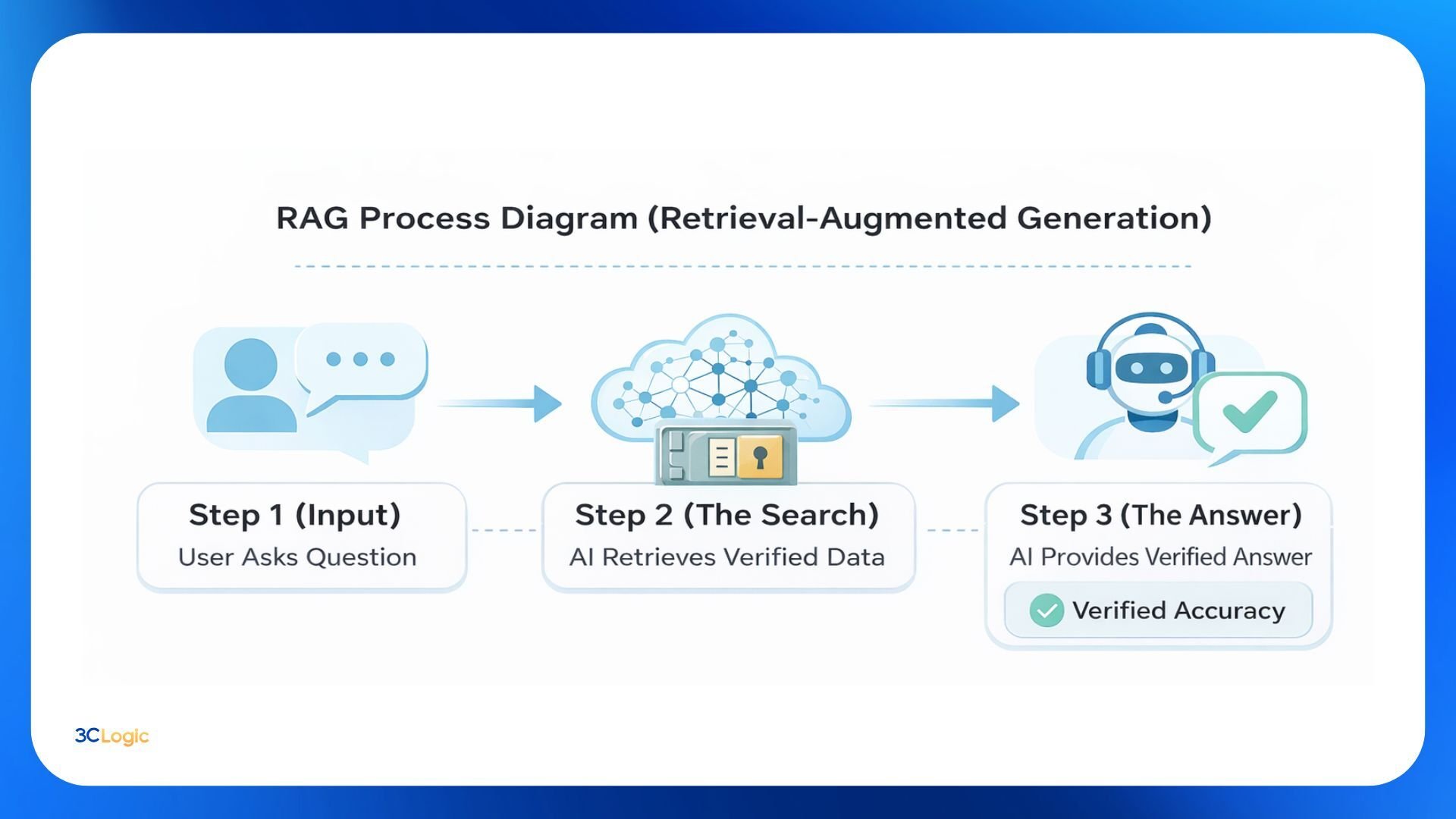

While non-deterministic AI provides the "conversational engine," enterprise leaders often worry about the AI "hallucinating" or providing outdated information. This is where Retrieval-Augmented Generation (RAG) becomes essential.

RAG is a framework that forces the AI to look up specific, authorized information from your internal systems before it speaks. Instead of relying solely on its training data, the AI retrieves a relevant document, such as a specific HR policy or a troubleshooting guide from a system of record (e.g., ServiceNow) or a native knowledge store, and uses it as the sole basis for its response.
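A few lines of Python can sketch the retrieve-then-answer loop. This is a simplified illustration, not a production RAG pipeline. It ranks hypothetical knowledge-base articles by plain word overlap rather than the embedding-based vector search most RAG systems use. It also stops at building the grounded prompt instead of calling a specific LLM API.

```python
from __future__ import annotations

import re

# Hypothetical knowledge-base articles standing in for a system of record.
KB = {
    "KB001": "To reset your VPN password, open the self-service portal and choose Reset VPN Password.",
    "KB002": "Parental leave requests are submitted through the HR portal.",
    "KB003": "If your laptop will not boot, hold the power button for 15 seconds and retry.",
}
STOPWORDS = {"a", "an", "and", "do", "how", "i", "is", "my", "the", "to", "what", "your"}


def tokenize(text: str) -> set[str]:
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}


def retrieve(query: str, k: int = 1) -> list[tuple[str, str]]:
    """Rank articles by word overlap with the query (a stand-in for vector search)."""
    q = tokenize(query)
    scored = [(len(q & tokenize(body)), kb_id, body) for kb_id, body in KB.items()]
    scored.sort(key=lambda item: item[0], reverse=True)
    return [(kb_id, body) for score, kb_id, body in scored[:k] if score > 0]


def build_grounded_prompt(query: str) -> str:
    """Build an LLM prompt that restricts the answer to the retrieved articles."""
    docs = retrieve(query)
    if not docs:
        return ""  # No authorized source found: escalate rather than let the model improvise.
    sources = "\n".join(f"[{kb_id}] {body}" for kb_id, body in docs)
    return (
        "Answer the caller using ONLY the sources below. If they do not contain "
        "the answer, say you will transfer the call.\n"
        f"Sources:\n{sources}\n\nCaller: {query}"
    )
```

When nothing relevant is retrieved, the function returns an empty prompt so the caller can be escalated. That is how the "sole basis" constraint shows up in code.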

Why RAG is a Game-Changer for Voice:

- Factual Accuracy: it greatly reduces the risk that the AI will "invent" a policy or a technical workaround, because its answers are constrained to what is in your verified Knowledge Base.

- Real-Time Updates: if you update a service price or a software version in your system of record, the Voice AI "knows" it as soon as the updated content is indexed, without needing to be retrained.

- Security & Permissions: RAG can be configured to only retrieve information the caller is authorized to see, ensuring data privacy is maintained throughout the voice interaction.
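Permission-aware retrieval is often implemented by checking candidate documents against the caller's entitlements before anything is ranked or placed in the prompt. This sketch assumes the caller has already been authenticated and mapped to a set of groups. How that happens (for example, PIN or caller-ID verification) is out of scope here. The `Article` records, group names, and `search` helper are hypothetical.

```python
from __future__ import annotations

import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Article:
    kb_id: str
    body: str
    allowed_groups: frozenset[str]


# Hypothetical articles with simple access-control lists.
ARTICLES = [
    Article("KB010", "Expense limits and approval steps for all staff.", frozenset({"employee", "hr_admin"})),
    Article("KB011", "Executive compensation review process.", frozenset({"hr_admin"})),
]


def authorized_articles(caller_groups: set[str]) -> list[Article]:
    """Filter by entitlement BEFORE ranking, so restricted text never reaches the prompt."""
    return [a for a in ARTICLES if a.allowed_groups & caller_groups]


def search(query: str, caller_groups: set[str]) -> list[str]:
    """Return IDs of authorized articles sharing at least one word with the query."""
    words = set(re.findall(r"[a-z0-9]+", query.lower()))
    return [
        a.kb_id
        for a in authorized_articles(caller_groups)
        if words & set(re.findall(r"[a-z0-9]+", a.body.lower()))
    ]
```

The filter runs before retrieval, not after generation. A caller without the `hr_admin` group gets no match for "compensation review", because the restricted article is never a candidate.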

The Power of RAG for Voice AI

4. The "Hybrid" Solution: Weaving Both Worlds Together

The most sophisticated enterprise architectures do not choose between deterministic and non-deterministic; they weave them together to compensate for each other's shortfalls.

Deterministic Guardrails for Non-Deterministic AI

To solve the "black box" problem of AI, leaders are implementing deterministic "wrappers." For example, the AI may troubleshoot a technical issue conversationally, but it can be forced onto a deterministic path when it reaches a high-risk moment, such as a legal disclaimer or a financial transaction approval.
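A deterministic wrapper can be as simple as a routing check that runs before any generated text is spoken. In this hypothetical sketch, an upstream classifier (assumed, not shown) labels each turn with an intent. Intents listed as high risk always return pre-approved wording. Only the remaining turns reach the `generate` callable, which stands in for whatever LLM call the platform makes.

```python
from __future__ import annotations

from typing import Callable

# Hypothetical pre-approved scripts; wording would be owned by legal or compliance.
APPROVED_SCRIPTS = {
    "legal_disclaimer": "This call may be recorded for quality and compliance purposes.",
    "payment_approval": "To approve this payment, please press 1. To cancel, press 2.",
}
HIGH_RISK_INTENTS = frozenset(APPROVED_SCRIPTS)


def respond(intent: str, utterance: str, generate: Callable[[str], str]) -> tuple[str, str]:
    """Return (mode, text); the mode is kept so every turn can be audited later."""
    if intent in HIGH_RISK_INTENTS:
        # Deterministic path: fixed wording, no model involvement.
        return "deterministic", APPROVED_SCRIPTS[intent]
    # Non-deterministic path: free-form answer from the LLM.
    return "generative", generate(utterance)
```

Returning the mode with the text gives compliance teams an audit trail showing which turns were scripted and which were generated.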

Non-Deterministic "Fallback" for IVR

Conversely, when a user reaches the end of a deterministic IVR menu without finding their option, instead of a "dead-end" transfer to an agent, a non-deterministic AI can take over to "catch" the intent and resolve the issue using the company's knowledge base.
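The fallback pattern reverses the order. The deterministic menu gets the first attempt, and the AI agent is invoked only when the caller's input matches no option. This sketch is hypothetical throughout. `ai_agent` stands in for a knowledge-base-backed Voice AI and returns `None` when it cannot resolve the request, at which point the call still reaches a human.

```python
from __future__ import annotations

from typing import Callable, Optional

# Hypothetical leaf options of a deterministic menu.
MENU_OPTIONS = {"1": "billing", "2": "order_status", "3": "store_hours"}


def handle_menu_input(user_input: str, ai_agent: Callable[[str], Optional[str]]) -> str:
    """Try the menu first; fall back to the AI agent, then to a human agent."""
    option = MENU_OPTIONS.get(user_input.strip())
    if option is not None:
        return f"route:{option}"  # deterministic branch matched
    resolution = ai_agent(user_input)  # non-deterministic "catch" of the intent
    if resolution is not None:
        return f"ai_resolved:{resolution}"
    return "transfer:human_agent"  # last resort, not the default
```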

5. Comparative Analysis: IVR vs. Voice AI

| Feature | Deterministic (IVR) | Non-Deterministic (Voice AI) |
| --- | --- | --- |
| Logic Foundation | Hard-coded rules and trees | Probabilistic LLMs and context |
| User Experience | Transactional, rigid, menu-driven | Conversational, fluid, empathetic |
| Scalability | Low (requires manual flow building) | High (draws on knowledge bases) |
| Error Handling | "I didn't catch that, please press 1" | "I understand you're frustrated, let me find that" |
| Best Use Case | High-security, simple transactions | Complex troubleshooting and Tier 1 support |

6. Strategic Implications for the Enterprise

For the VP of CX and the IT Service Desk Manager, the goal is orchestration.

The System of Record Advantage

Whether you use a CRM or an ITSM platform (e.g., ServiceNow), the shift to non-deterministic AI represents a massive opportunity to deflect high-volume tickets.

- Knowledge as Logic: in a non-deterministic world, your Knowledge Base (KB) is your logic. If your documentation is poor, the AI's response will be equally poor.

- Unified Data Layers: Voice AI performs best when it has access to the user's history (e.g., recent hardware requests in ServiceNow). Ensuring the voice layer and the system of record are in sync is a 2026 priority for most IT leaders.

Governance and Human-in-the-Loop (HITL)

Enterprise leaders cannot ignore the risks of non-deterministic output. Recent industry surveys indicate that AI trust and safety are now top priorities for CIOs.

- Guardrails: implementing "deterministic guardrails" around a non-deterministic engine—such as pre-defined "hard stops" for legal disclaimers—allows for the best of both worlds.

- Human-in-the-Loop (HITL): high-risk decisions should always be routed back to a deterministic path or a human agent to ensure compliance.

7. Best Practices for Implementation

To successfully navigate this transition, enterprise organizations should follow a phased roadmap:

- Audit the "Hang-Up" Points: use interaction analytics to identify where users are abandoning your current deterministic IVR. These friction points are the primary candidates for Voice AI.

- Start with "Hybrid" Flows: use a deterministic greeting (e.g., "Press 1 for Support, 2 for Sales") to route the call, then switch to a non-deterministic Voice AI agent to handle the specific inquiry within that category.

- Prioritize Integration over Intelligence: a "smart" AI that cannot check a ticket status in a system of record is less valuable than a "simple" AI that can. Ensure your Voice AI partner has deep, native integration with your ITSM or CRM platform.

- Monitor "Drift" and Accuracy: unlike an IVR, which you can "set and forget," Voice AI requires ongoing monitoring to ensure its non-deterministic responses remain aligned with corporate policy.
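The "hang-up point" audit from the first step can start as a simple aggregation over call logs. The log format here is a hypothetical simplification. It records only the last IVR node each caller reached and whether the call was abandoned. Real interaction-analytics exports will have richer fields.

```python
from __future__ import annotations

from collections import Counter

# Hypothetical call-log records: the last IVR node reached and how the call ended.
CALLS = [
    {"last_node": "main", "outcome": "resolved"},
    {"last_node": "billing_submenu", "outcome": "abandoned"},
    {"last_node": "billing_submenu", "outcome": "abandoned"},
    {"last_node": "billing_submenu", "outcome": "resolved"},
    {"last_node": "password_reset", "outcome": "resolved"},
    {"last_node": "password_reset", "outcome": "abandoned"},
]


def abandonment_by_node(calls: list[dict]) -> list[tuple[str, float]]:
    """Return (node, abandonment rate) sorted worst-first: candidates for Voice AI."""
    reached = Counter(c["last_node"] for c in calls)
    abandoned = Counter(c["last_node"] for c in calls if c["outcome"] == "abandoned")
    rates = {node: abandoned[node] / total for node, total in reached.items()}
    return sorted(rates.items(), key=lambda kv: kv[1], reverse=True)
```

With this sample data, `billing_submenu` ranks first because two of its three calls were abandoned. That makes it the first candidate for a Voice AI pilot.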

8. Measuring Success: Moving Beyond Containment

In the era of Voice AI, "containment rate" is an insufficient metric. Newer KPIs to consider include:

- Resolution Accuracy: Did the AI provide the correct answer or complete the correct action?

- Sentiment Shift: Did the caller’s tone improve from the beginning of the call to the end?

- Downstream Ticket Volume: Does the AI interaction lead to a reopened ticket within 24 hours?
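These KPIs are straightforward to compute once each call carries the right fields. The sketch assumes a hypothetical `CallRecord`. Correctness comes from QA review or caller confirmation, and sentiment scores on a -1 to 1 scale come from some sentiment model. Neither source is specified here. "Downstream ticket volume" is approximated as the share of calls whose ticket was reopened within 24 hours.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class CallRecord:
    answer_correct: bool       # from QA review or caller confirmation (assumed available)
    sentiment_start: float     # assumed scale: -1.0 (negative) to 1.0 (positive)
    sentiment_end: float
    ended_at: datetime
    ticket_reopened_at: Optional[datetime] = None


def voice_ai_kpis(calls: list[CallRecord]) -> dict[str, float]:
    """Compute resolution accuracy, mean sentiment shift, and 24-hour reopen rate."""
    if not calls:
        raise ValueError("need at least one call")
    n = len(calls)
    reopened = sum(
        1
        for c in calls
        if c.ticket_reopened_at is not None
        and c.ticket_reopened_at - c.ended_at <= timedelta(hours=24)
    )
    return {
        "resolution_accuracy": sum(c.answer_correct for c in calls) / n,
        "avg_sentiment_shift": sum(c.sentiment_end - c.sentiment_start for c in calls) / n,
        "reopen_rate_24h": reopened / n,
    }
```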

Conclusion: The Balanced Architecture

The future of the enterprise is not purely non-deterministic. There will always be a place for the certainty of a "Press 1" menu in specific contexts. However, the competitive advantage lies in the ability to layer Voice AI over these systems to create a more human, efficient, and scalable experience.

In 2026 and beyond, the distinction between "calling a company" and "talking to a company" will disappear. Organizations that fail to evolve their deterministic legacy systems risk being left behind by a workforce and customer base that no longer has the patience for a "one-size-fits-all" menu.

Next Steps for Leadership:

- Assess current state: conduct a friction audit of your current IVR.

- Identify the platform owner: ensure your system of record or CRM platform owner is involved in the Voice AI strategy to align data and knowledge.

- Evaluate the tech stack: check if your telephony provider can support LLM-based integrations without a total "rip and replace."